How NICs work? A quick dive!

I wrote this post as a draft some time ago but forgot to publish it. I looked into the topic to find out how DPDK physically works at the OS/device level and how it bypasses the network stack. So, when you attach a PCIe NIC to your Linux server, you expect traffic will flow

PCI passthrough: Type-PF, Type-VF and Type-PCI

Passthrough has become more and more popular over time. It started as simple PCI device assignment to VMs and then grew to be part of the high-performance networking realm in the cloud, with SR-IOV, host-level DPDK and VM-level DPDK for NFV. In OpenStack, if you need to pass through a device on your compute

VNI Ranges: What do they do?

Deployment tools for OpenStack have become very popular, including the well-known OpenStack-Ansible. They make deploying a cloud an easy task, at the expense of losing insight into what goes on behind the scenes of your cloud deployment. If you have had to configure neutron manually, you would have come across the following

Port security in OpenStack

OpenStack Neutron provides some protections for your VMs’ communications by default. These protections verify that VMs cannot impersonate other VMs. You can easily see how it does that by checking the flow rules in an OVS deployment using `ovs-ofctl dump-flows br-int`. If you look for a certain qvo port (or the port number, depending

Private External Networks in Neutron

You might find yourself in a position where you need to restrict tenants’ access to specific external networks. In OpenStack there’s the notion that external networks are accessible by all tenants and anyone can attach their private router to them. This might not be what you want if you need to only allow specific users

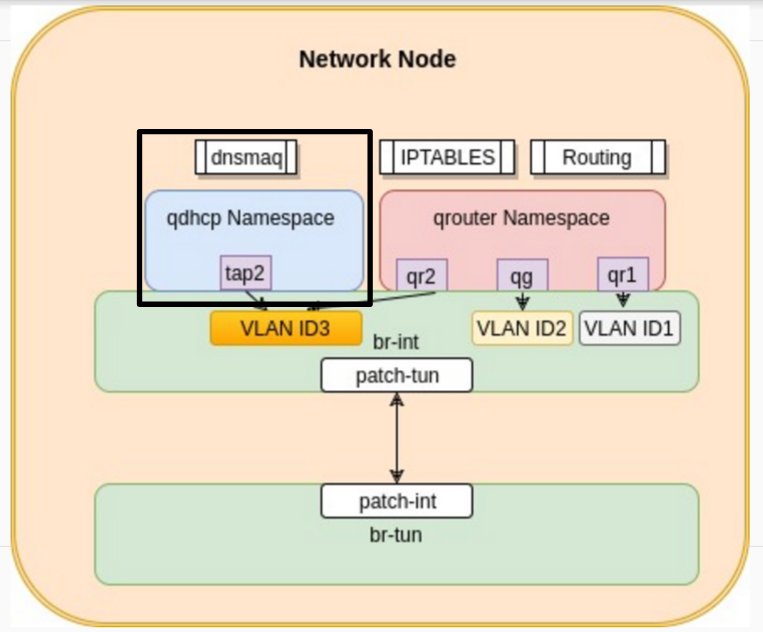

VM getting a DHCP address

DHCP requests are broadcast requests sent by the VM to its broadcast domain. If a DHCP server exists in this domain, it responds with an IP lease, following the DHCP protocol. In OpenStack, the same procedure is followed. A VM starts by sending its DHCP request to its broadcast domain which goes

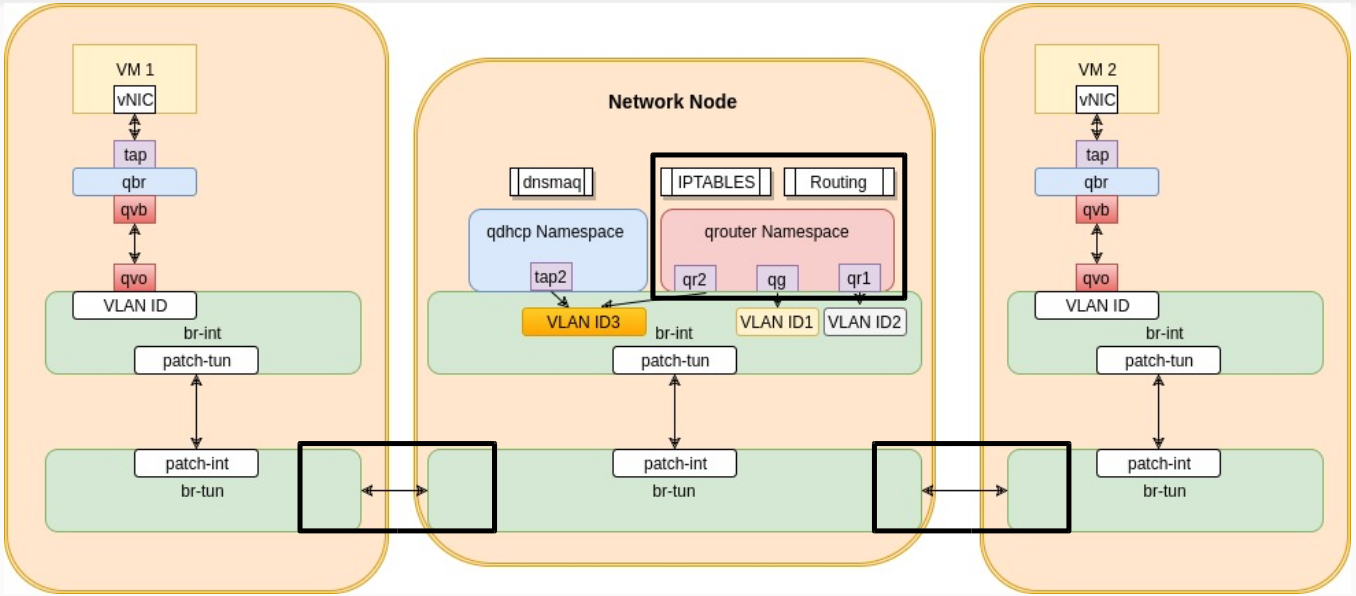

VM to VM communication: different networks

So far we have only spoken about communication between VMs that belong to the same network. But what happens when a VM has to communicate with another VM on a different network? The common rule of networking is that changing networks requires routing. This is exactly what neutron does to allow those kinds of VMs

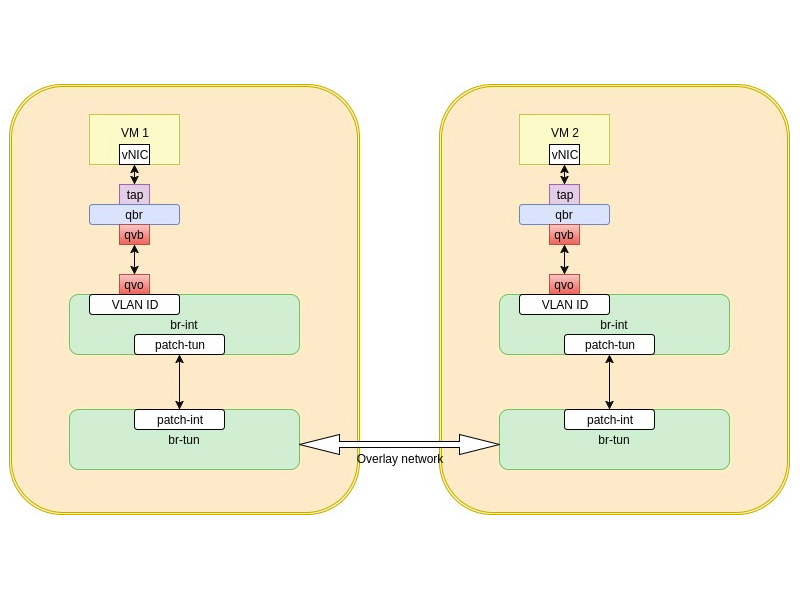

VM to VM communication, same network, different compute hosts

In the last post, we spoke about VM to VM communication when the VMs belong to the same network and happen to be deployed on the same host. This is a good scenario, but in a big OpenStack deployment, it’s unlikely that all your VMs belonging to the same network will end up on the same

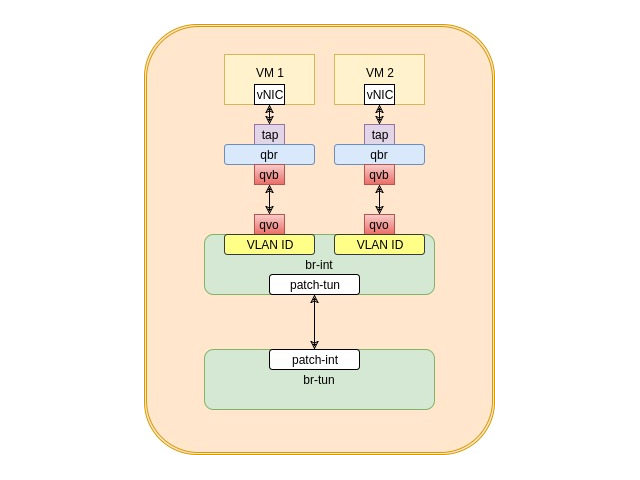

VM to VM communication: Same network & same compute host

In the physical world, machines communicate with each other without routers when they belong to the same network. The same is true in OpenStack: VMs on the same network communicate without routers. When two VMs belonging to the same network happen to be deployed on the same compute host, their logical diagram looks like

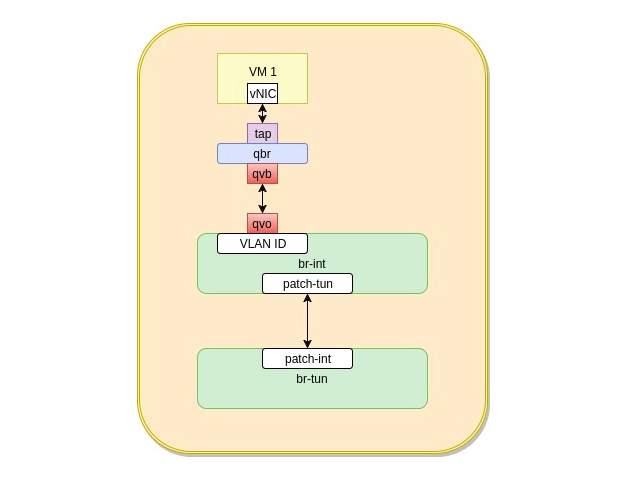

Traffic flows from an OpenStack VM

As we mentioned in the last post, traffic flows through a set of Linux virtual devices/switches to reach its destination after leaving the VM. Outbound traffic goes downward while inbound traffic moves upward. Traffic from the VM goes through the following steps: A VM generates traffic that goes through its internal vNIC