PCI passthrough: Type-PF, Type-VF and Type-PCI

Passthrough has become more and more popular over time. It started as simple PCI device assignment to VMs and then grew into part of the high-performance networking realm in the cloud, with SR-IOV, host-level DPDK and VM-level DPDK for NFV.

In OpenStack, if you need to pass through a device on your compute hosts to the VMs, you have to specify it in nova.conf using the passthrough_whitelist and alias directives under the [pci] section. A typical nova.conf on the controller node looks like this:

```ini
[pci]
alias = { "vendor_id":"1111", "product_id":"1111", "device_type":"type-PCI", "name":"a1"}
alias = { "vendor_id":"2222", "product_id":"2222", "device_type":"type-PCI", "name":"a2"}
```

while on the compute host, nova.conf looks like this:

```ini
[pci]
alias = { "vendor_id":"1111", "product_id":"1111", "device_type":"type-PCI", "name":"a1"}
alias = { "vendor_id":"2222", "product_id":"2222", "device_type":"type-PCI", "name":"a2"}
passthrough_whitelist = [{"vendor_id":"1111", "product_id":"1111"}, {"vendor_id":"2222", "product_id":"2222"}]
```

Each alias represents a device that nova-scheduler can schedule against using the PciPassthroughFilter. The more device types you want to pass through, the more alias lines you have to create.
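To make the alias-to-device matching concrete, here is a deliberately simplified Python model of the kind of check the filter performs. It is not nova's PciPassthroughFilter code: the `DevicePool` class and the `parse_alias`/`can_satisfy` helpers are hypothetical, and the request string only mimics the `name:count` format used by the `pci_passthrough:alias` flavor extra spec.

```python
import json
from dataclasses import dataclass


@dataclass
class DevicePool:
    """Hypothetical stand-in for a pool of identical free PCI devices on a host."""
    vendor_id: str
    product_id: str
    dev_type: str
    count: int


def parse_alias(line: str) -> dict:
    """Parse the JSON object on the right-hand side of an `alias = {...}` line."""
    _, _, value = line.partition("=")
    return json.loads(value)


def can_satisfy(request: str, aliases: dict, pools: list) -> bool:
    """Return True if the pools hold enough matching devices for e.g. 'a1:2'."""
    name, _, amount = request.partition(":")
    wanted = int(amount or 1)
    spec = aliases[name]
    available = sum(
        p.count
        for p in pools
        if p.vendor_id == spec["vendor_id"]
        and p.product_id == spec["product_id"]
        and p.dev_type == spec.get("device_type", p.dev_type)
    )
    return available >= wanted


lines = [
    'alias = { "vendor_id":"1111", "product_id":"1111", "device_type":"type-PCI", "name":"a1"}',
    'alias = { "vendor_id":"2222", "product_id":"2222", "device_type":"type-PCI", "name":"a2"}',
]
aliases = {a["name"]: a for a in map(parse_alias, lines)}
# One free device of the a1 kind on this (imaginary) host, none of the a2 kind.
pools = [DevicePool("1111", "1111", "type-PCI", 1)]

print(can_satisfy("a1:1", aliases, pools))  # True
print(can_satisfy("a1:2", aliases, pools))  # False
print(can_satisfy("a2:1", aliases, pools))  # False
```

The real filter also takes NUMA affinity and other tags into account; this sketch only compares vendor_id, product_id, device_type and counts.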

The alias syntax is quite self-explanatory: vendor_id identifies the device vendor, product_id identifies the device model, and name is an identifier you choose for this device. Both vendor_id and product_id can be obtained with the command

```shell
lspci -nn
```

You can deduce the vendor and product IDs from the output as follows:

```
000a:00:00.0 PCI bridge [0000]: Host Bridge [1111:2222]
```

In this case, the vendor_id is 1111 and the product_id is 2222.
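If you have many devices, the same deduction can be scripted. The sketch below assumes the `lspci -nn` line format shown above, where the last `[vvvv:pppp]` pair holds the vendor and product IDs and the earlier `[cccc]` is the device class code; `vendor_product` is a hypothetical helper name.

```python
import re

# A "[vendor:product]" pair of 4-digit hex IDs; the class code "[cccc]" has no colon.
ID_PAIR = re.compile(r"\[([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\]")


def vendor_product(line: str) -> tuple:
    """Return (vendor_id, product_id) from one line of `lspci -nn` output."""
    matches = ID_PAIR.findall(line)
    if not matches:
        raise ValueError(f"no [vendor:product] pair in: {line!r}")
    # The IDs for the device itself are the last bracketed pair on the line.
    return matches[-1]


print(vendor_product("000a:00:00.0 PCI bridge [0000]: Host Bridge [1111:2222]"))
# ('1111', '2222')
```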

But what about device_type in the alias definition? Well, device_type can take one of three values: type-PCI, type-PF and type-VF.

type-PCI is the most generic. It passes the PCI card through to the guest VM using the following mechanism:

- IOMMU/VT-d is used for memory mapping and isolation, so that the guest OS can access the memory structures of the PCI device directly

- No vendor driver is loaded for the PCI device in the compute host OS (with managed='yes', libvirt binds it to a stub driver such as vfio-pci instead)

- The guest VM drives the device directly using the vendor driver
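Since isolation relies on the IOMMU, it helps to know which devices share an IOMMU group: VFIO treats the group as the unit of isolation, so devices in the same group generally have to be handed to a guest together. A minimal sketch, assuming the standard Linux sysfs layout under `/sys/kernel/iommu_groups/<n>/devices/`; `iommu_groups` is a hypothetical helper name and the root path is a parameter so it can be pointed elsewhere.

```python
import os


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict:
    """Map each IOMMU group number to the sorted PCI addresses it contains.

    An empty result usually means the IOMMU (e.g. VT-d) is not enabled.
    """
    groups = {}
    for group in sorted(os.listdir(root), key=int):
        devices_dir = os.path.join(root, group, "devices")
        groups[int(group)] = sorted(os.listdir(devices_dir))
    return groups


if __name__ == "__main__" and os.path.isdir("/sys/kernel/iommu_groups"):
    for number, devices in iommu_groups().items():
        print(number, devices)
```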

When a PCI device is attached to a qemu-kvm instance, the libvirt domain definition for that instance includes a hostdev element for the device, for example:

```xml
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source>
    <address domain='0x1111' bus='0x11' slot='0x11' function='0x1'/>
  </source>
  <address type='pci' domain='0x1111' bus='0x11' slot='0x1' function='0x0'/>
</hostdev>
```

The next two types are more interesting. They originated with SR-IOV capable devices, which introduce the notions of a Physical Function (PF) and Virtual Functions (VFs). Both differ from type-PCI in one core respect:

- A PF driver is loaded for the SR-IOV device in the compute host OS.

Let’s explain the difference between type-VF and type-PF, starting with VFs:

type-VF allows you to pass through a Virtual Function, which is a lightweight PCIe function that, in the case of network devices, has its own RX/TX queues. Your VM uses the VF driver provided by the vendor to access the VF and treats it as a regular device for I/O. VFs generally share the vendor_id of the physical device but have their own product_id.

type-PF, on the other hand, refers to a full-featured PCIe function that controls the SR-IOV capable device, including the configuration of its Virtual Functions. type-PF allows you to pass through the PF itself so that it is controlled by the VM. This is sometimes useful in NFV use cases.
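Because a PF and its VFs usually share a vendor_id but differ in product_id, an SR-IOV card typically needs one alias per function type. The sketch below renders such alias lines; the IDs 1111/2222/3333 reuse the placeholder values from the log examples later in this post, and the alias names `sriov-pf`/`sriov-vf` and the `alias_line` helper are hypothetical.

```python
import json

VALID_TYPES = ("type-PCI", "type-PF", "type-VF")


def alias_line(vendor_id: str, product_id: str, device_type: str, name: str) -> str:
    """Render one [pci] alias entry for nova.conf as a JSON object."""
    if device_type not in VALID_TYPES:
        raise ValueError(f"unknown device_type: {device_type}")
    spec = {
        "vendor_id": vendor_id,
        "product_id": product_id,
        "device_type": device_type,
        "name": name,
    }
    return "alias = " + json.dumps(spec)


# Placeholder IDs: same vendor, different product IDs for the PF and its VFs.
print(alias_line("1111", "2222", "type-PF", "sriov-pf"))
print(alias_line("1111", "3333", "type-VF", "sriov-vf"))
```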

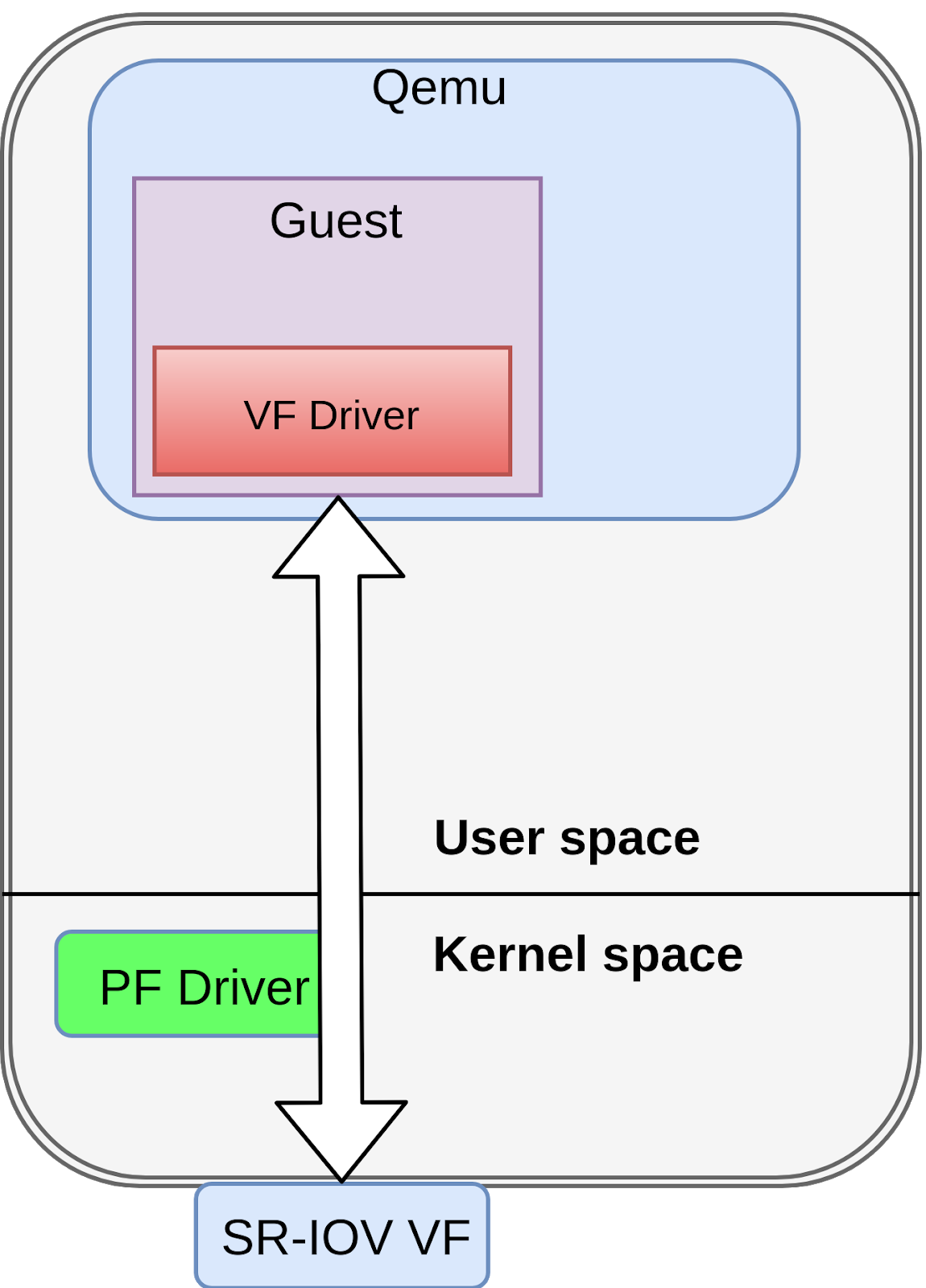

The image above shows a simplified PF/VF layout.

The PF driver is used to configure the SR-IOV functionality and to partition the device into Virtual Functions, which are then accessed by the VMs in userspace.
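On Linux, the partitioning done by the PF driver is exposed through two sysfs files on the PF: `sriov_totalvfs` (the maximum number of VFs) and `sriov_numvfs` (how many VFs currently exist). The sketch below reads and sets them; it assumes the standard `/sys/bus/pci/devices/<address>/` layout, a PF driver that supports SR-IOV, and root privileges for writing. The helper names are hypothetical, and the sysfs root is a parameter so the code can be exercised outside a real host.

```python
import os

SYSFS_PCI = "/sys/bus/pci/devices"


def sriov_status(pci_addr: str, sysfs_root: str = SYSFS_PCI) -> dict:
    """Read the maximum and current VF counts of an SR-IOV PF."""
    counts = {}
    for name in ("sriov_totalvfs", "sriov_numvfs"):
        with open(os.path.join(sysfs_root, pci_addr, name)) as f:
            counts[name] = int(f.read().strip())
    return counts


def set_numvfs(pci_addr: str, count: int, sysfs_root: str = SYSFS_PCI) -> None:
    """Ask the PF driver to create `count` VFs (needs root on a real host).

    The kernel refuses to change a non-zero VF count directly, so the count
    is reset to 0 first and then set to the requested value.
    """
    path = os.path.join(sysfs_root, pci_addr, "sriov_numvfs")
    for value in (0, count):
        with open(path, "w") as f:
            f.write(str(value))
```

For example, `set_numvfs("0000:3b:00.0", 4)` followed by `sriov_status("0000:3b:00.0")` on a host where that (placeholder) address is an SR-IOV PF.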

A nice feature of nova-compute is that it logs the Final resource view, which contains details of the devices available for passthrough. In the case of PF passthrough, it looks like this:

```
Final resource view: pci_stats=[PciDevicePool(count=2,numa_node=0,product_id='2222',tags={dev_type='type-PF'},vendor_id='1111')]
```

This says there are two devices on NUMA node 0 with the specified vendor_id and product_id that are available for passthrough.

In the case of VF passthrough:

```
Final resource view: pci_stats=[PciDevicePool(count=1,numa_node=0,product_id='3333',tags={dev_type='type-VF'},vendor_id='1111')]
```

In this case, there is only one VF, with vendor_id 1111 and product_id 3333, available for passthrough on NUMA node 0.
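When checking several compute hosts, it can be handy to pull these pools out of the logs programmatically. This is a regex-based sketch that assumes the exact `PciDevicePool(...)` format shown in the two log lines above; the log format may differ between nova releases, and `parse_pci_stats` is a hypothetical helper, not part of nova.

```python
import re

# One PciDevicePool(...) entry; its fields contain no nested parentheses.
POOL = re.compile(r"PciDevicePool\(([^()]*)\)")


def _field(body: str, pattern: str):
    """Return the first captured group of `pattern` in `body`, or None."""
    m = re.search(pattern, body)
    return m.group(1) if m else None


def parse_pci_stats(log_line: str) -> list:
    """Extract one dict per PciDevicePool from a 'Final resource view' line."""
    pools = []
    for body in POOL.findall(log_line):
        numa = _field(body, r"numa_node=(\w+)")
        pools.append({
            "count": int(_field(body, r"count=(\d+)")),
            # numa_node may be None when the host does not report NUMA affinity.
            "numa_node": int(numa) if numa and numa.isdigit() else None,
            "vendor_id": _field(body, r"vendor_id='([^']*)'"),
            "product_id": _field(body, r"product_id='([^']*)'"),
            "dev_type": _field(body, r"dev_type='([^']*)'"),
        })
    return pools


line = ("Final resource view: pci_stats=[PciDevicePool(count=2,numa_node=0,"
        "product_id='2222',tags={dev_type='type-PF'},vendor_id='1111')]")
print(parse_pci_stats(line))
```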

The blueprint for the PF passthrough type is here, if you’re interested:

https://blueprints.launchpad.net/nova/+spec/sriov-physical-function-passthrough

Good luck!
